| << MSSQL to Cosmos DB Migrator and Containerized Blazor App Azure DevOps CI Deployment | Graph API App Webjob Azure DevOps CI Deployment Security >> |

Automatic Azure StorageSyncService Migration and SMB over QUIC Deployment

Introduction

In this post I will detail the steps to automatically migrate a file share located on an on-premises server to the Azure cloud and to enable SMB-over-QUIC on the migrated share. Afterwards, roaming users will be able to securely access the migrated share over the Internet.

To follow security best practices, the source files that deploy the resources do not contain any sensitive information, such as passwords. Sensitive information is stored in Azure KeyVault instead. The scripts also apply the Principle of Least Privilege to every resource they deploy. For example, the cloud storage account hosting the migrated share only allows access from the on-premises network and the project's cloud virtual network.

The process consists of four steps: deploying the cloud resources, migrating a local share to the cloud, setting up a cloud VM with a replica of the share, and enabling SMB-over-QUIC on that VM. I will describe the scripts that automatically execute the first three steps in sequence, and walk through the last step, which is done in Windows Admin Center. Along the way, I will point out where the scripts apply additional security measures or automation logic.

The scripts are available in a public GitHub repository, and all the resources can be tried out with an Azure trial subscription, so anyone wishing to replicate the project can do so:

Better-Computing-Consulting/azure-storagesyncservice-migration-smb-over-quic-deployment: Ansible playbook to deploy the resources for a Windows Server 2022 Datacenter Azure Edition with a virtual network gateway connected to a Cisco ASA Firewall on premises and a secured storage account. Two PowerShell scripts: one to deploy a StorageSyncService in azure to replicate a local share to the new storage account, and another script to setup the new Windows Server 2022 VM as a file server with a replica of the share and an SSL certificate to enable SMB over QUIC access on the public network for remote users without a VPN.

I have also posted a YouTube video demonstrating the process from beginning to end, including tests of different levels of access to the replicated cloud share from the internet.

Step 1: Deployment of Cloud Resources

The project repository contains an Ansible playbook called azresources.yml. This playbook is responsible for deploying all the cloud resources, which include a virtual network, a storage account, a file server VM, and a Virtual Network Gateway. The playbook also configures an on-premises Cisco ASA firewall to set up a VPN tunnel to the Azure virtual network. This tunnel is necessary to join the file server VM to the on-premises NT domain.

The azresources.yml playbook is based on the vpnsecure.yml playbook we developed for the Azure DevOps CI Ansible VPN Deployment Between Virtual Network Gateway and Cisco ASA project. As in the vpn-deployment project, this playbook retrieves the secrets it needs from an Azure KeyVault, and the same template used to deploy the KeyVault in the vpn-deployment project can be used here. The only difference is that, in addition to the secrets used to access the Cisco ASA firewall, the KeyVault for this project holds one more secret: the administrator password of the file server VM. Thus, to set up the KeyVault for this project, first deploy it with the vpn-deployment project's KeyVault template. The template takes the values returned by the following commands.

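To get the object ID of the read-only service principal account, run this command:

```bash
az ad sp list --display-name ansible-service-name-here | grep objectId
```

To get the object ID of the admin user account, run this command:

```bash
az ad user list --upn admin-user@your-domain-here_com | grep objectId
```

On Azure CLI 2.37 and later, which uses Microsoft Graph, the property is named id instead of objectId. In that case, append --query "[].id" -o tsv to either command instead of piping it to grep.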

To get the public IP of the server running the Azure Pipelines Agent, run this command:

```bash
dig +short myip.opendns.com @resolver1.opendns.com
```

Once the KeyVault is deployed, add the VM administrator password secret with this Azure CLI command:

```bash
az keyvault secret set --name vmadminpw --vault-name your-vault-name --value your-private-pw
```

Note that the playbook looks for a secret named vmadminpw. If you choose a different name, update the playbook to match.
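Before running the playbook, it can help to confirm that all five secrets it reads are present in the vault. The following is a minimal sketch, assuming the secret names listed in azresources.yml. missing_secrets is a hypothetical helper that reads the existing names from standard input, which keeps the Azure CLI call outside the function.

```bash
#!/usr/bin/env bash
# Secret names that azresources.yml requests from the KeyVault.
REQUIRED_SECRETS=(ASAUserName ASAUserPassword EnablePassword VPNSharedKey vmadminpw)

# missing_secrets (hypothetical helper): reads the names of the secrets that
# exist in the vault, one per line, on stdin; prints each required name that
# is absent and returns 1 if any are missing, 0 otherwise.
missing_secrets() {
  local existing name status=0
  existing=$(cat)
  for name in "${REQUIRED_SECRETS[@]}"; do
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    if ! grep -qxF -- "$name" <<< "$existing"; then
      echo "$name"
      status=1
    fi
  done
  return "$status"
}
```

For example, the following command prints any missing secret names and exits with a nonzero status if there are any: az keyvault secret list --vault-name your-vault-name --query "[].name" -o tsv | missing_secrets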

Playbook execution

Once the KeyVault is ready, clone your copy of the repository to an on-premises Linux server that has Ansible, azure.azcollection, and the cisco.asa collection installed. Edit its variables to fit your environment if you have not already done so, and then run the playbook with this command:

```bash
ansible-playbook -i hosts azresources.yml
```

The Azure DevOps CI Ansible VPN Deployment Between Virtual Network Gateway and Cisco ASA blog post contains detailed instructions on how to set up a Linux server with Ansible that is suitable for running the playbook.

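In its first task, azresources.yml retrieves the secrets required for the project from the KeyVault. It then assigns these secrets to Ansible variables. The secrets are referenced by their position in the loop, so the order of the names in the loop must match the indexes used in the set_fact task: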

```yaml
- name: Get project secret variables from KeyVault
  azure.azcollection.azure_rm_keyvaultsecret_info:
    vault_uri: "{{ keyvaulturl }}"
    name: "{{ item }}"
  loop:
    - "ASAUserName"
    - "ASAUserPassword"
    - "EnablePassword"
    - "VPNSharedKey"
    - "vmadminpw"
  register: secrets

- name: Set asa cli and vpn shared key variables
  set_fact:
    cli:
      host: "{{ inventory_hostname }}"
      authorize: yes
      username: "{{ secrets.results[0]['secrets'][0]['secret'] }}"
      password: "{{ secrets.results[1]['secrets'][0]['secret'] }}"
      auth_pass: "{{ secrets.results[2]['secrets'][0]['secret'] }}"
    vpnsharedkey: "{{ secrets.results[3]['secrets'][0]['secret'] }}"
    vmadminpw: "{{ secrets.results[4]['secrets'][0]['secret'] }}"
```

Then the playbook deploys a Resource Group for all the project's resources and a Virtual Network, and adds the subnet that will host the file server VM to the virtual network. For security purposes, the subnet is created with a Service Endpoint for Microsoft.Storage. The service endpoint lets the storage account's firewall allow traffic from this subnet while denying all other traffic.

```yaml
- name: Add subnet with Microsoft.Storage service endpoint
  azure_rm_subnet:
    resource_group: "{{ rg }}"
    name: "{{ subnet1name }}"
    address_prefix: "{{ subnet1iprange }}"
    virtual_network: "{{ vnet }}"
    service_endpoints:
      - service: "Microsoft.Storage"
        locations:
          - "westus"
  register: subnetinfo
```

After setting up the virtual network, the playbook captures the public IP of the on-premises network and assigns it to a variable.

```yaml
- name: Get on-prem public ip
  ansible.builtin.shell: "dig +short myip.opendns.com @resolver1.opendns.com"
  register: shres

- name: Set on-prem public ip variable
  set_fact:
    asapubip: "{{ shres.stdout }}"
```

This public IP is used to secure the storage account that will host the migrated file share and to configure the VPN tunnel between Azure and the on-premises network.
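The playbook uses whatever dig prints. If the lookup fails or returns unexpected output, that text ends up in the storage account firewall rule and in the VPN configuration. The following is a minimal sketch of a shell guard, assuming you want to validate the address before running the playbook; the same check could also be written as an Ansible assert task. is_ipv4 and get_public_ip are hypothetical helper names.

```bash
#!/usr/bin/env bash
# is_ipv4 (hypothetical helper): succeed only if $1 is a single dotted-quad
# IPv4 address with every octet in the range 0-255.
is_ipv4() {
  local ip=$1 octet
  local -a octets
  # Rejects empty output, error text and multi-line output
  [[ $ip =~ ^[0-9]{1,3}(\.[0-9]{1,3}){3}$ ]] || return 1
  IFS=. read -r -a octets <<< "$ip"
  for octet in "${octets[@]}"; do
    # 10# forces base 10 so an octet such as "08" is not read as octal
    (( 10#$octet <= 255 )) || return 1
  done
}

# get_public_ip (hypothetical helper): query OpenDNS the same way the
# playbook does and print the address only if it passes is_ipv4.
get_public_ip() {
  local ip
  ip=$(dig +short myip.opendns.com @resolver1.opendns.com)
  if is_ipv4 "$ip"; then
    echo "$ip"
  else
    echo "unexpected dig output: '$ip'" >&2
    return 1
  fi
}
```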

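The next step in the playbook deploys the storage account. The storage account does not accept connections from arbitrary hosts on the internet. It only accepts connections from the on-premises public IP and from the subnet created above. You can see these restrictions in the network access rules of the storage account: the default action is Deny, and only the subnet and the on-premises IP are allowed. The bypass: AzureServices setting lets trusted Microsoft services, such as the Storage Sync Service, reach the account through the firewall.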

```yaml
- name: Deploy storage account and configure firewall and virtual networks
  azure_rm_storageaccount:
    resource_group: "{{ rg }}"
    name: "{{ storageaccountname }}"
    type: Standard_LRS
    kind: StorageV2
    minimum_tls_version: TLS1_2
    network_acls:
      bypass: AzureServices
      default_action: Deny
      virtual_network_rules:
        - id: "{{ subnetinfo['state']['id'] }}"
          action: Allow
      ip_rules:
        - value: "{{ asapubip }}"
          action: Allow
```

Then, the azresources.yml playbook deploys the resources required for the file server VM. These resources and the VM are configured to allow only SMB-over-QUIC connections over the internet.

First, the playbook deploys the VM public IP and creates a public DNS record for it, so clients on the internet can locate the file server.

```yaml
- name: "Create public IP address for file server VM"
  azure_rm_publicipaddress:
    resource_group: "{{ rg }}"
    allocation_method: Static
    name: "{{ vmname }}PubIP"
  register: vmpubip

- name: "Add an A DNS record for {{ vmpubip['state']['ip_address'] }} and the chosen VM name and public domain {{ vmname }}.{{ dnszone }}"
  azure_rm_dnsrecordset:
    resource_group: "{{ dnsresourcegroup }}"
    relative_name: "{{ vmname }}"
    zone_name: "{{ dnszone }}"
    record_type: A
    records:
      - entry: "{{ vmpubip['state']['ip_address'] }}"
```

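Then, the playbook creates a Network Security Group with a single custom rule that allows inbound traffic on UDP port 443, the port used by SMB-over-QUIC. Azure's default rules deny all other inbound traffic from the internet. The default AllowVnetInBound rule still admits traffic from the virtual network and from the on-premises network connected through the VPN gateway.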

```yaml
- name: "Create Network Security Group that allows SMB over QUIC port UDP 443"
  azure_rm_securitygroup:
    resource_group: "{{ rg }}"
    name: "{{ vmname }}NSG"
    rules:
      - name: "UDP_443"
        protocol: Udp
        destination_port_range: 443
        access: Allow
        priority: 1001
        direction: Inbound
```
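The following is a simplified model of how Azure evaluates inbound NSG rules. It shows why only UDP 443 gets through from the internet while management over the VPN still works: rules are checked in ascending priority order, and the first match decides. The sketch is illustrative only and makes these simplifications:

- Sources are reduced to the labels Internet and VirtualNetwork. The VirtualNetwork service tag also covers on-premises ranges reached through the VPN gateway.
- The AllowAzureLoadBalancerInBound default rule is omitted.
- nsg_decision is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Simplified model of inbound NSG evaluation (illustration only).
# Each rule: "priority name source protocol port access"; "*" matches anything.
# Rule 1001 mirrors the playbook's UDP_443 rule; 65000 and 65500 mirror two
# of Azure's default inbound rules.
NSG_RULES=(
  "1001  UDP_443          *              Udp  443  Allow"
  "65000 AllowVnetInBound VirtualNetwork *    *    Allow"
  "65500 DenyAllInBound   *              *    *    Deny"
)

# nsg_decision SOURCE PROTOCOL PORT
# Rules are tried in ascending priority order; the first match decides.
nsg_decision() {
  local src=$1 proto=$2 port=$3
  local prio name r_src r_proto r_port access
  while read -r prio name r_src r_proto r_port access; do
    [[ $r_src == "*" || $r_src == "$src" ]] || continue
    [[ $r_proto == "*" || $r_proto == "$proto" ]] || continue
    [[ $r_port == "*" || $r_port == "$port" ]] || continue
    echo "$name $access"
    return 0
  done < <(printf '%s\n' "${NSG_RULES[@]}" | sort -n -k1,1)
  echo "NoMatch Deny"
}
```

For example, nsg_decision Internet Tcp 445 falls through to DenyAllInBound, while nsg_decision VirtualNetwork Tcp 3389 is allowed by AllowVnetInBound. The second case is the path used to manage the VM over the VPN.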

Next, the azresources.yml playbook creates the file server's Virtual Network Interface. The new file server VM needs to be joined to the on-premises NT domain, so the IP address of the on-premises domain controller is the first DNS server of the virtual network interface.

```yaml
- name: "Create vNIC with public IP"
  azure_rm_networkinterface:
    resource_group: "{{ rg }}"
    name: "{{ vmname }}NIC"
    virtual_network: "{{ vnet }}"
    subnet: "{{ subnet1name }}"
    security_group: "{{ vmname }}NSG"
    dns_servers:
      - "{{ landns }}"
```

At this point we are ready to deploy the file server VM. To avoid writing a password in the playbook, we use the VM administrator password retrieved from the KeyVault. The playbook also adds a second, 64 GB data disk that will hold the copy of the replicated share. SMB-over-QUIC requires the operating system to be "Windows Server 2022 Datacenter: Azure Edition". To reduce the attack surface of the server, the playbook selects the Server Core installation option. Server Core has the added benefit of reducing the number of reboots after patching, because it allows you to enable Hotpatch.

```yaml
- name: "Create file server VM"
  azure_rm_virtualmachine:
    resource_group: "{{ rg }}"
    name: "{{ vmname }}"
    vm_size: Standard_B2s
    admin_username: "{{ vmadmin }}"
    admin_password: "{{ vmadminpw }}"
    network_interfaces: "{{ vmname }}NIC"
    os_type: Windows
    managed_disk_type: Standard_LRS
    data_disks:
      - lun: 0
        disk_size_gb: 64
        managed_disk_type: Standard_LRS
    image:
      offer: WindowsServer
      publisher: MicrosoftWindowsServer
      sku: '2022-datacenter-azure-edition-core-smalldisk'
      version: latest
```

After deploying the file server VM, the playbook deploys the VPN tunnel between the Azure Virtual Network and the on-premises LAN. Please see the Azure DevOps CI Ansible VPN Deployment Between Virtual Network Gateway and Cisco ASA blog post for details on this part of the process.

Step 2: Migration of On-Premises File Share

After deploying the Azure infrastructure, we are ready to migrate our on-premises file share to the cloud. In this project we use Azure Storage Sync Service (the service behind Azure File Sync) to migrate the file share. With the Storage Sync Service, we set up a Sync Group for the share to be migrated. The sync group contains a Cloud Endpoint and one or more Server Endpoints, and the cloud endpoint keeps a full copy of all the files of the migrated share.

Depending on the cloud tiering policies, the sync service can also free space on a server endpoint by tiering files that have not been accessed for a long time. The content of a tiered file is kept only in the cloud, and the local file is replaced by a pointer. When a new server without a copy of the share is set up as a server endpoint, the share's entire namespace (the file listing and metadata) is downloaded onto the server first, so users can access the share's files through the new server as well. Every time a user opens a file through the new server, the file's content is downloaded from the cloud and cached locally on the server.

In this project, the PowerShell script SourceSMBServer.ps1 in the GitHub repository deploys the Storage Sync Service and the Sync Group. The script also sets up the Cloud Endpoint and a Server Endpoint on the local, on-premises file server. The script must run on the on-premises file server that hosts the share to be migrated, because it installs the Storage Sync agent on the local host. It also installs all the PowerShell modules it requires.

The server running the script does not need to have Git installed. scp is not part of PowerShell itself. It comes with the Windows OpenSSH client, which is available on Windows Server 2019 and later (install the OpenSSH Client optional feature if it is missing). You can use scp to fetch the SourceSMBServer.ps1 script from the Linux server where the project repository is cloned. For example, the following command, run from a PowerShell console, downloads the script into the current folder:

```powershell
scp user@linuxserver:~/azure-storagesyncservice-migration-smb-over-quic-deployment/SourceSMBServer.ps1 .
```

Again, before running the script you should update the script variables to fit your environment, especially the local path to the share to be migrated.

SourceSMBServer.ps1 Script Execution

After installing all the required PowerShell modules, the SourceSMBServer.ps1 script deploys the Storage Sync Service.

```powershell
"Deploy StorageSync Service to Azure"
$storageSync = New-AzStorageSyncService -ResourceGroupName $rg -Name $storageSyncName -Location $region
```

After deploying the Storage Sync Service, the script downloads and installs the Storage Sync agent on the local host. As you can see below, the script is currently configured to run on Windows Server 2019. If you are migrating from a server running a different version of Windows Server, change "2019" in the download URL to that version.

```powershell
"Download StorageSync Agent"
Invoke-WebRequest -Uri https://aka.ms/afs/agent/Server2019 -OutFile "StorageSyncAgent.msi"

"Install StorageSync Agent"
Start-Process -FilePath "StorageSyncAgent.msi" -ArgumentList "/quiet" -Wait
```
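SourceSMBServer.ps1 hard-codes the Server 2019 agent link. To automate the choice, one option is to key it on the Windows build number, which PowerShell exposes as [System.Environment]::OSVersion.Version.Build. The mapping below is sketched in shell to match the other examples, and afs_agent_url is a hypothetical name. The 2022 and 2019 links are the ones used by the project's scripts. The 2016 link follows the same pattern, but check it against the current Azure File Sync documentation before relying on it.

```bash
#!/usr/bin/env bash
# afs_agent_url (hypothetical helper): print the Azure File Sync agent
# download link for a Windows Server build number, or fail if unknown.
afs_agent_url() {
  case "$1" in
    20348) echo "https://aka.ms/afs/agent/Server2022" ;;  # Windows Server 2022
    17763) echo "https://aka.ms/afs/agent/Server2019" ;;  # Windows Server 2019
    14393) echo "https://aka.ms/afs/agent/Server2016" ;;  # Windows Server 2016 (verify)
    *) echo "unsupported Windows Server build: $1" >&2; return 1 ;;
  esac
}
```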

Every server that will become a Server Endpoint of a Sync Group must first be registered with the Storage Sync Service. The script registers the server in the next step.

```powershell
"Register Server in StorageSync Service"
$registeredServer = Register-AzStorageSyncServer -ParentObject $storageSync
```

Next, the script creates the Storage Sync Group.

```powershell
"Create StorageSyncGroup"
$syncGroup = New-AzStorageSyncGroup -ParentObject $storageSync -Name $syncGroupName
```

Then, in preparation for creating the Cloud Endpoint, the script adds a File Share to the Storage Account that the Ansible playbook azresources.yml created in Step 1. The azresources.yml playbook and the SourceSMBServer.ps1 script each have a variable with the Storage Account name and another with the name of the Resource Group that contains it. The values of these variables must match in both files for the process to succeed.

```powershell
"Add File Share to the Storage Account"
$fileShare = New-AzRmStorageShare -ResourceGroupName $rg -StorageAccountName $storageAccountName -Name $shareName -AccessTier Hot
```
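A mismatch between the two files only shows up when New-AzRmStorageShare fails, so a quick pre-flight check can help. The following is a minimal sketch with these assumptions:

- The playbook defines the name as storageaccountname: value.
- SourceSMBServer.ps1 defines it as $storageAccountName = "value".
- Each definition sits on its own line.

These layouts are assumptions, so adjust the sed patterns to match your copies. The sketch also checks the Azure naming rule for storage accounts: 3 to 24 characters, lowercase letters and digits only. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Print the first value assigned to storageaccountname in a YAML file,
# with quotes, spaces and carriage returns removed.
yaml_storage_name() {
  sed -n 's/^[[:space:]]*storageaccountname:[[:space:]]*//p' "$1" | head -n 1 | tr -d "\"' \r"
}

# Print the first value assigned to $storageAccountName in a PowerShell file.
ps_storage_name() {
  sed -n 's/^[[:space:]]*\$storageAccountName[[:space:]]*=[[:space:]]*//p' "$1" | head -n 1 | tr -d "\"' \r"
}

# check_storage_names PLAYBOOK SCRIPT: succeed only if both names match and
# follow the storage account naming rule.
check_storage_names() {
  local yml ps
  yml=$(yaml_storage_name "$1")
  ps=$(ps_storage_name "$2")
  if [[ "$yml" != "$ps" ]]; then
    echo "mismatch: '$yml' (playbook) vs '$ps' (script)" >&2
    return 1
  fi
  if [[ ! "$yml" =~ ^[a-z0-9]{3,24}$ ]]; then
    echo "invalid storage account name: '$yml'" >&2
    return 1
  fi
  echo "ok: $yml"
}
```

For example, run check_storage_names azresources.yml SourceSMBServer.ps1 from the repository folder. The same approach extends to the resource group name, and to the Storage Sync Service name shared by the two PowerShell scripts.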

Before creating the Cloud Endpoint, the script fetches the storage account as an object, so it can pass the storage account's resource ID to the Cloud Endpoint command.

```powershell
"Get Storage Account Created in Ansible Playbook"
$storageAccount = Get-AzStorageAccount -ResourceGroupName $rg -Name $storageAccountName
```

At this point, we are ready to deploy both the Cloud and Server Endpoints.

```powershell
"Create StorageSyncCloudEndpoint"
$cloudendpoint = New-AzStorageSyncCloudEndpoint `
    -Name $fileShare.Name `
    -ParentObject $syncGroup `
    -StorageAccountResourceId $storageAccount.Id `
    -AzureFileShareName $fileShare.Name
$cloudendpoint.ProvisioningState

"Create StorageSyncServerEndpoint"
$serverendpoint = New-AzStorageSyncServerEndpoint `
    -Name $registeredServer.FriendlyName `
    -SyncGroup $syncGroup `
    -ServerResourceId $registeredServer.ResourceId `
    -ServerLocalPath $localSharePath `
    -CloudTiering -VolumeFreeSpacePercent 20
$serverendpoint.ProvisioningState
```

After each command, the script outputs the resulting ProvisioningState so you can confirm that the operation succeeded.

The last step of SourceSMBServer.ps1 prepares for enabling SMB-over-QUIC on an additional Server Endpoint. In this project, SMB-over-QUIC is enabled with Windows Admin Center. Windows Admin Center can be installed on any server, but for simplicity we install it on the same host as the share being migrated. Thus, the last step of the script downloads and installs Windows Admin Center on the local host.

```powershell
"Download Windows Admin Center"
Invoke-WebRequest 'https://aka.ms/WACDownload' -OutFile "WindowsAdminCenter.msi"

"Install Windows Admin Center"
Start-Process -FilePath "WindowsAdminCenter.msi" -ArgumentList "/quiet", "SME_PORT=443", "SSL_CERTIFICATE_OPTION=generate" -Wait
```

Step 3: Add Second Server Endpoint and Set it for SMB-over-QUIC Access

In this step we configure the newly deployed Windows Server 2022 Azure VM as an additional Server Endpoint of the replicated share's Sync Group and get it ready for SMB-over-QUIC access. The ReplicaSMBServer.ps1 script in the project's repository configures the new VM.

In Step 1, the azresources.yml playbook set up a site-to-site VPN tunnel between the on-premises network and the Azure Virtual Network. This tunnel lets us manage the new Windows VM through its private IP and join the server to the on-premises NT domain. It also lets the new VM use scp to download the ReplicaSMBServer.ps1 PowerShell script from the Linux server that holds the clone of the repository. For example, the following command, run from a PowerShell console, downloads the ReplicaSMBServer.ps1 script into the current folder:

```powershell
scp -oStrictHostKeyChecking=no user@linuxserver.smbdemo.dev:~/azure-storagesyncservice-migration-smb-over-quic-deployment/ReplicaSMBServer.ps1 .
```

When we first access the server, it is not yet joined to the domain, so we need the fully qualified hostname, including the domain name, to locate the Linux server. When azresources.yml deployed the server's Virtual Network Interface, it set the private IP address of the on-premises DNS server as the interface's DNS server. That DNS server is authoritative for the smbdemo.dev domain, so it can resolve linuxserver.smbdemo.dev.

The command also sets the SSH StrictHostKeyChecking option to no, so scp does not pause to ask you to accept the host key. A safer alternative, supported by OpenSSH 7.6 and later, is StrictHostKeyChecking=accept-new, which accepts a new host key automatically but still refuses a changed one. As with the other script and the playbook, be sure to modify the variables to fit your environment.

ReplicaSMBServer.ps1 Script Execution

The first step of ReplicaSMBServer.ps1 checks whether the server is joined to the domain and joins it if it is not.

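```powershell
if ( $env:USERDNSDomain -ne $domain ){
    # First run: join the domain and reboot
    Add-Computer -DomainName $domain -Restart
} else {
    # Second run, after the reboot and logged on with a domain account so that
    # USERDNSDOMAIN is set: the rest of the script runs in this branch
```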

After the server joins the domain and reboots, run the script again while logged on with a domain account. This time the script initializes its data disk and formats it as drive F.

```powershell
"Setup data disk as drive F"
$disk = Get-Disk | where-object PartitionStyle -eq "RAW"
$disk | Initialize-Disk -PartitionStyle GPT
$partition = $disk | New-Partition -UseMaximumSize -DriveLetter F
$partition | Format-Volume -Confirm:$false -Force
```

Next, the ReplicaSMBServer.ps1 script creates a folder in the F drive for the replicated share and shares the folder.

```powershell
"Create directory for share"
new-item -path F: -name $sharename -itemtype "directory"

"Share directory"
New-SmbShare -Name $sharename -Path F:\$sharename -FolderEnumerationMode AccessBased -FullAccess $sharefullaccessgroup -ChangeAccess $sharechangeaccessgroup
```

The New-SmbShare command sets the FolderEnumerationMode option of the share to AccessBased to ensure that users can only see the folders to which they have access.

Next, the script downloads and installs the Storage Sync Agent.

```powershell
"Download StorageSyncAgent.msi"
Invoke-WebRequest -Uri https://aka.ms/afs/agent/Server2022 -OutFile "StorageSyncAgent.msi"

"Install StorageSyncAgent.msi"
Start-Process -FilePath "StorageSyncAgent.msi" -ArgumentList "/quiet" -Wait
```

Then, after installing the required PowerShell modules, the script logs into Azure and registers the server with the Storage Sync Service.

```powershell
"Login to Azure"
Connect-AzAccount -DeviceCode

"Register Server in StorageSync Service"
$registeredServer = Register-AzStorageSyncServer -ResourceGroupName $rg -StorageSyncServiceName $syncservicename
```

Finally, ReplicaSMBServer.ps1 creates a Server Endpoint for the local server. The script first gets the existing Sync Group as an object. It then passes that object, together with the registered server's properties, to the New-AzStorageSyncServerEndpoint command. Because Get-AzStorageSyncGroup is called without a sync group name, this assumes the Storage Sync Service contains a single sync group. Both ReplicaSMBServer.ps1 and SourceSMBServer.ps1 have variables for the Storage Sync Service name and the Resource Group, and their values must match in both scripts for the process to succeed. The script also outputs the result of the operation so you can confirm that it succeeded.

```powershell
"Get the StorageSyncGroup created in SourceSMBServer"
$syncGroup = Get-AzStorageSyncGroup -ResourceGroupName $rg -StorageSyncServiceName $syncservicename

"Create StorageSyncServerEndpoint"
$serverendpoint = New-AzStorageSyncServerEndpoint `
    -Name $registeredServer.FriendlyName `
    -SyncGroup $syncGroup `
    -ServerResourceId $registeredServer.ResourceId `
    -ServerLocalPath "F:\$sharename" `
    -CloudTiering -VolumeFreeSpacePercent 20
$serverendpoint.ProvisioningState
```
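The -VolumeFreeSpacePercent 20 setting applies to the whole volume that hosts the server endpoint. Cloud tiering tries to keep at least 20% of the volume free, so roughly 80% of it can hold cached file content. The following small sketch works through that arithmetic for the 64 GB data disk that the playbook attaches. tiering_budget_mib is a hypothetical helper, and it ignores partition and file system overhead, so real numbers are slightly lower.

```bash
#!/usr/bin/env bash
# tiering_budget_mib GIB PERCENT (hypothetical helper): integer MiB estimate
# of the free space cloud tiering aims to keep and of the space left for
# cached files, for a volume of GIB gibibytes and a free space policy of PERCENT.
tiering_budget_mib() {
  local total_mib=$(( $1 * 1024 ))
  local free_mib=$(( total_mib * $2 / 100 ))
  echo "free_target=${free_mib} cache_max=$(( total_mib - free_mib ))"
}
```

For the replica's data disk, tiering_budget_mib 64 20 prints free_target=13107 cache_max=52429. That is about 12.8 GiB kept free and about 51.2 GiB available for locally cached files.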

At this point the new Azure VM is ready to serve the replicated share to clients on the same Azure Virtual Network or connected through a VPN. The remaining steps of the script only concern SMB-over-QUIC, which gives roaming users access to the share over the Internet without a VPN connection.

One of the requirements for SMB-over-QUIC is that the file server must have a valid TLS certificate that clients trust. A publicly signed certificate is the simplest option for roaming users. In our deployment, we used Let's Encrypt to issue an SSL certificate for our file server and copied the certificate to the same Linux server that holds the clone of the project repository.

Note on using Let's Encrypt to issue an SSL Certificate

To issue my SSL certificate, I first set up a public DNS A record for the host W202202.bcc.bz pointing to the public IP of the server on which I intended to issue the certificate. Then, on that server, a FreeBSD machine, I ran these commands to 1) install certbot, the Let's Encrypt client, 2) issue the certificate, and 3) export the certificate to a pfx file:

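```bash
# 1) Install certbot; the pyXY- prefix depends on the Python version of your package repository
pkg install -y py37-certbot
# 2) Issue the certificate; standalone mode briefly runs its own web server on port 80
certbot certonly --standalone -d W202202.bcc.bz
# 3) Export to PFX from the folder holding the certbot files; prompts for the export password
openssl pkcs12 -export -out w202202.bcc.bz.pfx -inkey privkey1.pem -in cert1.pem -certfile chain1.pem
```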
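Clients validate the server certificate against the name they connect to. The certificate should therefore cover the DNS name that clients use, such as the {{ vmname }}.{{ dnszone }} record that the playbook created in Step 1. The following is a minimal sketch of that comparison, using the conservative rule most TLS clients apply (see RFC 6125): names are compared case-insensitively, and a wildcard is allowed only as the entire left-most label, where it covers exactly one label. cert_name_matches is a hypothetical helper and needs bash 4 or later. With OpenSSL 1.1.1 or later, openssl x509 -in cert1.pem -noout -ext subjectAltName lists the names actually present in the certificate.

```bash
#!/usr/bin/env bash
# cert_name_matches PATTERN HOST (hypothetical helper): succeed if the
# certificate DNS name PATTERN covers HOST. Comparison is case-insensitive;
# "*" is accepted only as the entire left-most label and matches one label.
cert_name_matches() {
  local pattern=${1,,} host=${2,,}
  if [[ $pattern == "*."* ]]; then
    local suffix=${pattern#\*.}
    # HOST must be "<one label>.<suffix>" with a non-empty, dot-free label
    local label=${host%".$suffix"}
    [[ $host == *".$suffix" && -n $label && $label != *.* ]]
  else
    [[ $pattern == "$host" ]]
  fi
}
```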

In its final steps, ReplicaSMBServer.ps1 downloads the SSL certificate pfx file from the Linux server and installs it on the local host.

```powershell
"Get SSL Certificate as pfx file from SSH server"
$scpcommand = "scp -oStrictHostKeyChecking=no $sshuser@$sshserver$unix_path$pfx_cert_file $win_path"
Invoke-Expression $scpcommand

"Get certificate password"
# Get-Credential serves only as a secure prompt; the PFX password is read
# from the returned credential's Password property (a SecureString)
$mypwd = Get-Credential -UserName 'Enter Cert password' -Message 'Enter Cert password'

"Install certificate"
Import-PfxCertificate -FilePath $win_path$pfx_cert_file -CertStoreLocation Cert:\LocalMachine\My -Password $mypwd.Password
```

At this point we are ready to enable SMB-over-QUIC using Windows Admin Center, which we installed on the original file server.

Step 4: Enable SMB-over-QUIC on the New Replica File Server

First, open the instance of Windows Admin Center that we installed in the last step of SourceSMBServer.ps1 by browsing to:

https://sourcefileserver.localdomain.dom

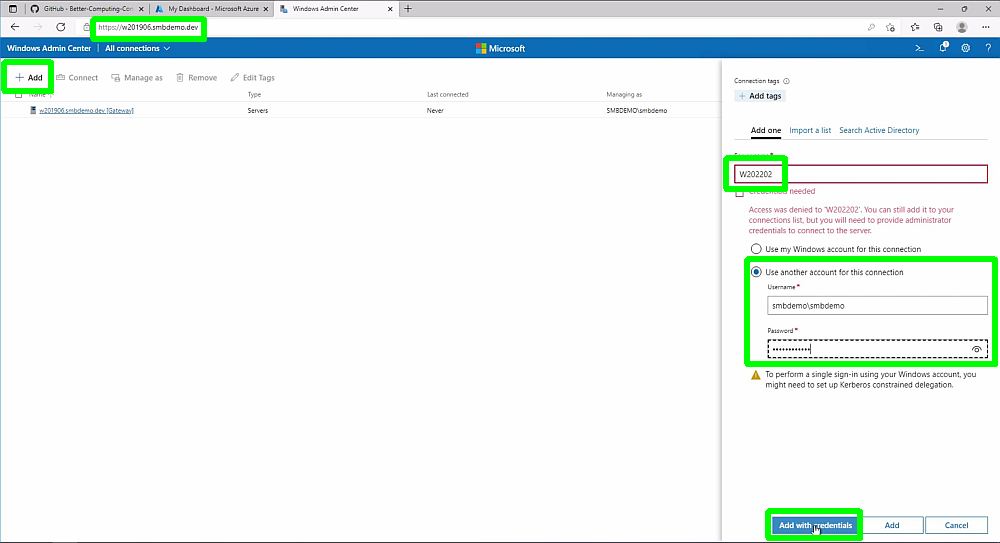

Then, add the new Azure VM file server by clicking on Add and entering the server information.

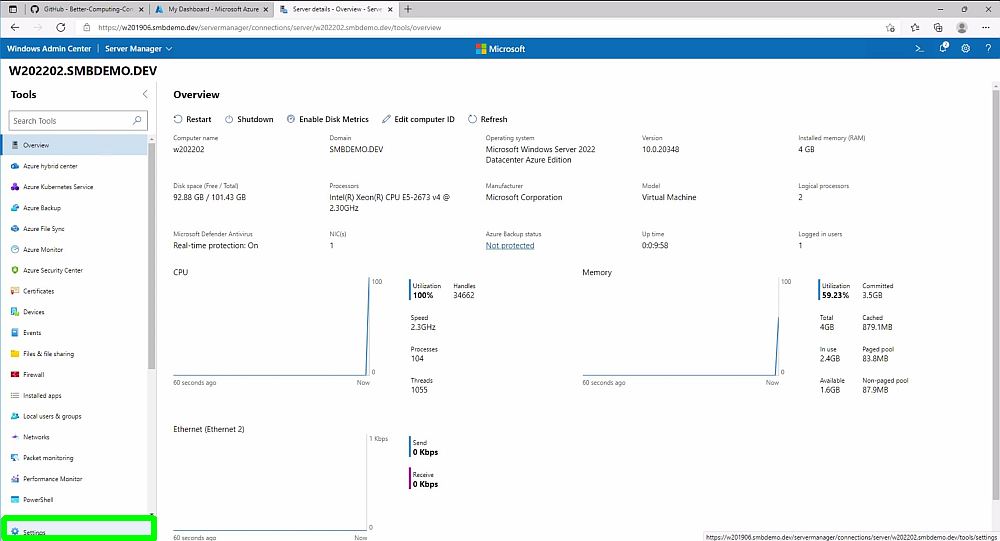

Next, connect to the server by clicking the newly added server name, and then click the Settings button.

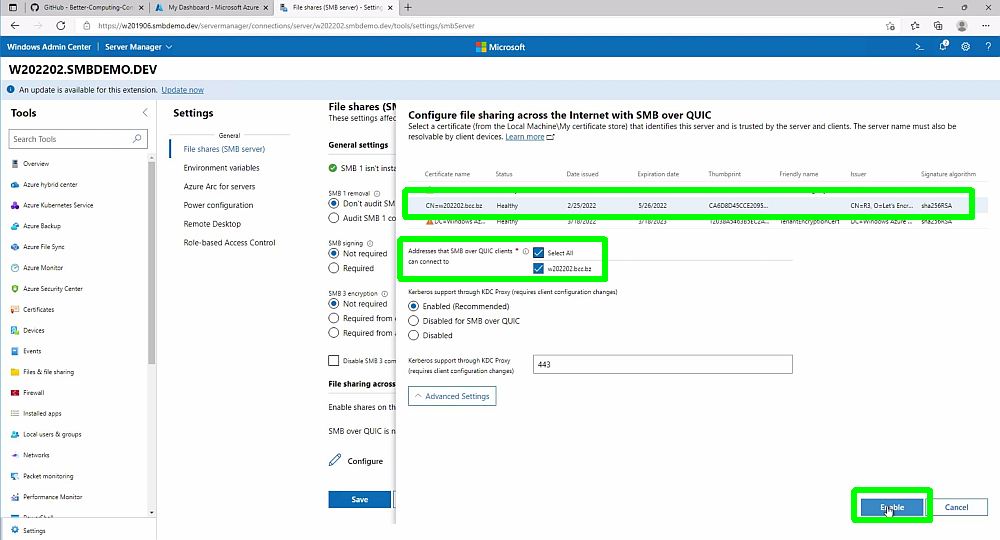

Then, under the File shares (SMB server) section, click Configure, select the SSL certificate we installed on the server in the last step of ReplicaSMBServer.ps1, and click Enable.

That is it. SMB-over-QUIC is now enabled, and roaming users can access the migrated file share over the Internet on UDP port 443 without a VPN connection, provided their client supports SMB-over-QUIC (for example, Windows 11).

The permissions from the original, migrated share are enforced when SMB-over-QUIC is used to access the share. Users who do not have access to folders will not be able to see these folders. The credentials of users that do not belong to the on-premises NT domain will be rejected.

At the end of the YouTube video included in this post, I demonstrate access to the migrated share over SMB-over-QUIC by users with different levels of access and by users who do not belong to the domain.

I hope you have found this post helpful. Please do not hesitate to contact Better Computing Consulting if you have any questions.

Thank you for reading.

Federico Canton

IT Consultant

Better Computing Consulting
